AI judges of beauty contest branded racist

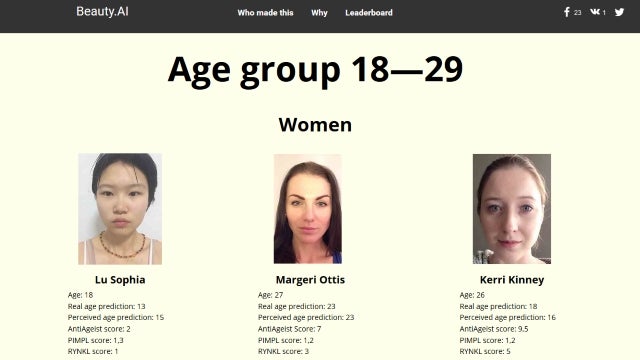

It’s not the first time artificial intelligence has been in the spotlight for apparent racism, but Beauty.AI’s recent competition results have caused controversy by clearly favouring light skin.

The competition, which ran online and was open to men and women of all ages around the world, ended with almost all of the winners having white skin, leading to cries of robot racism.

However, given that AI is only as smart as the data sets it’s trained on, that could be overstating the point. Robots have no way of being inherently racist. An alternative headline could be ‘Failed research methodology leads to biased results’, but that’s hardly as compelling a read.

Indeed, Alex Zhavoronkov, Beauty.AI’s chief science officer, said the results were due to a lack of ethnic minorities in the training data set. This meant that although light skin wasn’t defined as part of beauty, the AI drew the association anyway.

That oversight, or shortcoming, of the research meant that, despite the whole aim of the competition being to eliminate human bias by judging on specific criteria, bias still crept in.

Earlier in the year, Tay, an AI Twitter chatbot created by Microsoft, had to be taken offline after just 24 hours, having been taught to become a sex-obsessed racist.

Clearly, we need to find a way to train AI more robustly.

Related: Microsoft apologises for AI Twitter chatbot’s neo-Nazi, sex-mad tirades

Do you think robots can be racist, or do you think it’s always bad data provided by humans? Let us know in the comments below.