Zotac nVidia GeForce GTX 275 Amp! Edition Review


Ed finds out how nVidia's GeForce GTX 275 compares with yesterday's AMD ATI Radeon HD 4890.<br />


Key Specifications

- Review Price: £252.99

I was tempted to start this review with a full-blown rant about why it is that two manufacturers chose to launch their new products on the same day, giving me very little time to test and evaluate them. However, that’s probably just my grouchy state from having been up until 4am last night doing the testing. The fact of the matter is, you don’t care, and that’s cool, I totally understand; both nVidia and AMD have released brand new graphics cards and all you want to know is which one to buy. So, yesterday I looked at AMD’s ATI Radeon HD 4890 and now it’s the turn of nVidia’s GeForce GTX 275 or in our particular case Zotac’s overclocked version thereof.

In a nutshell, internally this card is essentially a GTX 285 with less memory and correspondingly a cut-down memory interface. By default it also has lower clock speeds but considering so many cards are released pre-overclocked this is perhaps a moot point. At default clock speeds, though, the GTX 275 has a maximum pixel fillrate of 17.7Gpixels/sec and memory bandwidth of 127GB/s, some 14 per cent and 20 per cent lower than the GTX 285, yet 30 per cent and 5 per cent higher than ATI’s HD 4890, respectively. As always, though, the true performance cannot be gleaned from these theoretical numbers but rather from how well the GTX 275 performs in real world tests, which we will be looking at later on.
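Theoretical figures like these are normally derived from a handful of specifications. The sketch below reproduces the GTX 275’s numbers, assuming nVidia’s reference specifications of 28 ROPs, a 633MHz core clock, a 448-bit memory bus and a 2,268MHz effective GDDR3 data rate (none of which are quoted in this review), and assuming one pixel per ROP per clock for the fillrate figure.

```python
# Sketch: deriving the GTX 275's theoretical pixel fillrate and memory bandwidth.
# Assumed reference specs (not stated in the review): 28 ROPs, 633MHz core,
# 448-bit memory bus, 2268MHz effective GDDR3 data rate.

def pixel_fillrate_gpix(rops: int, core_mhz: float) -> float:
    """Peak pixel fillrate in Gpixels/sec, assuming one pixel per ROP per clock."""
    return rops * core_mhz / 1000.0

def memory_bandwidth_gbs(bus_width_bits: int, effective_mhz: float) -> float:
    """Peak memory bandwidth in GB/s: bytes per transfer times transfers per second."""
    return (bus_width_bits / 8) * effective_mhz / 1000.0

if __name__ == "__main__":
    fill = pixel_fillrate_gpix(28, 633)
    bw = memory_bandwidth_gbs(448, 2268)
    print(f"Pixel fillrate: {fill:.1f} Gpixels/sec")  # Pixel fillrate: 17.7 Gpixels/sec
    print(f"Memory bandwidth: {bw:.0f} GB/s")          # Memory bandwidth: 127 GB/s
```

As the paragraph above stresses, these are peak values only; real games rarely hit either limit.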

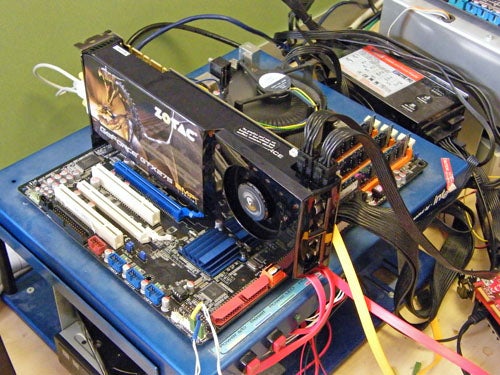

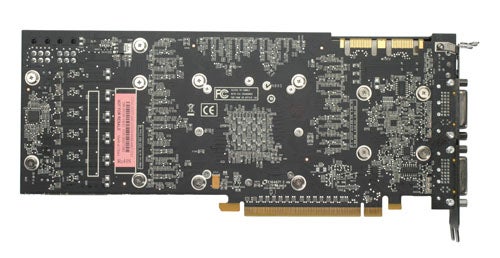

The card itself is very similar to the GTX 285, being 10.5 inches long with a dual-slot cooler design and an undeniably cool combination of matt black PCB and gun-metal grilles. The extra inch in length over ATI’s HD 4890 isn’t just academic, though, as it means the card overhangs most standard ATX motherboards by an inch. For most enthusiast cases this shouldn’t be a problem, but it’s something worth checking before you opt for this card.

Outputs are the standard selection of two dual-link DVI sockets accompanied by an analogue output for component, S-Video, and composite. Unfortunately, Zotac doesn’t include the appropriate dongles for component or composite, leaving just S-Video as an out-of-the-box option from that port.

When the card is idling, its cooler is noticeably quieter than those of ATI’s latest cards, and the card feels cooler as well. It’s not a dramatic difference but it’s definitely there, and this is despite our card coming pre-overclocked. At full pelt, meanwhile, they’re all just as loud as each other. However, nVidia or Zotac should perhaps have rejigged the card’s automatic fan controller to run even faster, because we experienced regular stability problems due to the card overheating under heavy load.

When we used the performance tool in nVidia’s drivers to reduce the clock speeds back to default, the crashing stopped, but we were then stuck with having to keep the fan at a constant speed (as that’s all the tool allows when ‘overclocking’). Adding a relatively slow and quiet fan blowing onto the back of the card also resolved the crashing, so if you have good case ventilation you may not have problems. When overclocked, though, this certainly isn’t a card to cram inside a confined space.

As we would expect at this price/performance level, two extra power connectors are needed to keep (or indeed get) the card running so you’ll need a decent modern power supply. Meanwhile, the usual SLI connector points are also present along the top edge. Unfortunately no SLI bridges are included in the box.

If you choose to buy this particular Zotac GTX 275, one potential highlight is a free copy of one of our favourite racing games, Race Driver: GRID. You also get a full copy of 3DMark Vantage, so you can easily test your graphics card’s performance. There are also two 4-pin to 6-pin power adapters and an S/PDIF audio cable for passing audio from your sound card out through the DVI socket, from where it can be carried along an HDMI cable using the included DVI-to-HDMI adapter. Finishing things off is the venerable DVI-to-VGA adapter.

A word on CUDA

One of the key differentiators between nVidia and ATI graphics cards at the moment is nVidia’s undeniably more mature and widely adopted platform for using the processing power of graphics cards for things other than 3D games. The most prominent of these applications has been offloading the physics calculations of the PhysX physics engine onto the GPU, which results in better performance in games that support it.

*The glass and fabric effects in Mirror’s Edge definitely add to the experience, but alone they are not enough to convince us to buy one graphics card over another.*

All well and good, except very few titles support this yet and, of those that do, few use it to the extent that it makes a discernible difference to gameplay. The one major exception so far is Mirror’s Edge, where the constant running around glass-fronted buildings provides plenty of opportunities for the advanced glass-breaking effects to shine through. However, this alone would not be enough for us to recommend an nVidia card over an ATI one. We need a regular stream of quality titles that rely on this tech before we feel this one feature would make up for any 3D processing performance deficits.

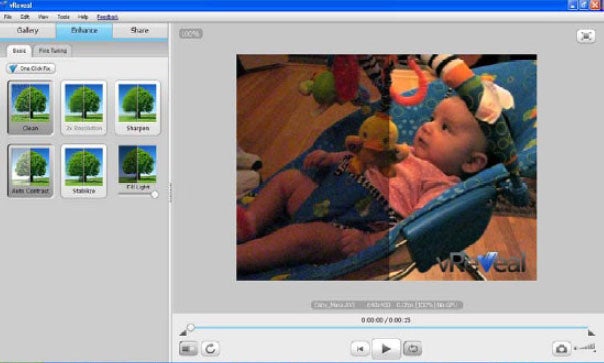

*MotionDSP’s vReveal offers impressive and easy-to-use video enhancements, made quicker by GPU acceleration.*

Likewise, a few token video conversion and processing tools in the form of Badaboom and vReveal are not going to persuade us that CUDA is the be-all and end-all, particularly as these programs aren’t even free. Sure, nVidia has support from the highly regarded TMPGEnc video transcoder, but CyberLink’s PowerDirector demonstrates a more likely long-term trend in that it supports both CUDA and ATI Stream. Don’t get us wrong, we’re not dismissing these value-added features outright; we just don’t feel there’s enough here to influence a buying decision for something that is going to spend the vast majority of its time doing other things (namely processing 3D graphics scenes).

Test System

* Intel Core i7 965 Extreme Edition

* Asus P6T motherboard

* 3 x 1GB Qimonda IMSH1GU03A1F1C-10F PC3-8500 DDR3 RAM

* 150GB Western Digital Raptor

* Microsoft Windows Vista Home Premium 64-bit

Cards Tested

* Zotac GeForce GTX 275 Amp! Edition

* nVidia GeForce GTX 275 (Zotac GeForce GTX 275 Amp! Edition running at nVidia reference clock speeds)

* nVidia GeForce GTX 260

* ATI HD 4890

* ATI HD 4870

Drivers

* ATI HD 4890 – Beta Driver

* Other ATI cards – Catalyst 9.2

* nVidia cards – 182.08

Games Tested

* Crysis

* Race Driver: GRID

* Call of Duty 4

* Counter-Strike: Source

While it hasn’t been a huge commercial success and its gameplay is far from revolutionary, the graphical fidelity of Crysis is still second to none and as such it’s still the ultimate test for a graphics card. With masses of dynamic foliage, rolling mountain ranges, bright blue seas, and big explosions, this game has all the eye-candy you could wish for and then some.

We test using the 64-bit version of the game, patched to version 1.1 and running in DirectX 10 mode. We use a custom timedemo taken from the first moments of the game, wandering around the beach. Surprisingly, considering its claustrophobic setting and graphically rich environment, we find that any frame rate above 30fps is just about sufficient to play this game. All in-game settings are set to high for our test runs and we test with both 0xAA and 2xAA.

First things first, it’s clear that overclocking this card provides a decent boost in performance. Indeed, if you compare the average cost per fps in this title for the Zotac and reference-speed cards, it is almost exactly the same. In other words, you’re not paying a premium for the overclocked model and, unlike overclocking a card yourself, you keep your warranty. Compare the GTX 275 with the HD 4890 and we have a very even fight, with the GTX 275 just edging it on average.
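The review doesn’t quote the reference-clocked card’s price or the individual frame rates in the text, so this sketch only illustrates the arithmetic behind a cost-per-fps comparison, assuming cost per fps means price divided by average frame rate in a given title. The function names and the 5 per cent tolerance used for “almost exactly the same” are our own illustrative choices, not the review’s method.

```python
# Sketch: cost-per-fps comparison between two cards in one title.
# Assumption: cost per fps = price / average frame rate.
# Function names and the 5% tolerance are hypothetical, for illustration only.

def cost_per_fps(price_gbp: float, avg_fps: float) -> float:
    """Pounds paid per frame per second of average performance."""
    if avg_fps <= 0:
        raise ValueError("average frame rate must be positive")
    return price_gbp / avg_fps

def roughly_equal_value(cost_a: float, cost_b: float, tolerance: float = 0.05) -> bool:
    """True if two cost-per-fps figures differ by no more than `tolerance` (as a fraction)."""
    return abs(cost_a - cost_b) / min(cost_a, cost_b) <= tolerance
```

For the Zotac card, `cost_per_fps(252.99, measured_fps)` would give its figure, where `measured_fps` stands for its average frame rate in the test in question.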

Race Driver: GRID is the newest game in our testing arsenal and it’s currently one of our favourites too. Its combination of arcade-style thrills and spills with a healthy dose of realism and extras like Flashback makes it a great pick-up-and-go driving game. It’s also visually stunning, with beautifully rendered settings, interactive crowds, destructible environments, and superb lighting. All that and it’s not the most demanding game on hardware, either.

We test using the 64-bit version of the game, patched to version 1.2, and running in DirectX 10 mode. FRAPS is used to record frame rates while we manually complete one circuit of the Okutama Grand Circuit, in a Pro Tuned race on normal difficulty. We find a frame rate of at least 40fps is required to play this game satisfactorily, as significant stutters can ruin your timing and precision. We’d also consider 4xAA a minimum, as the aliasing on the straight lines of track, barriers, and car bodies is a constant distraction. All in-game settings are set to their maximum and we test with 0xAA and 4xAA.

It’s a case of more of the same with regard to the overclocked Zotac card but, overall, the GTX 275 doesn’t hold up to the competition from the HD 4890. This round goes to AMD.

What can we say about Counter-Strike: Source that hasn’t already been said before? It is quite simply the benchmark for team-based online shooters and, five years after its release, it’s still one of the most popular games in its genre. It focuses on small environments and incredibly intensive small-scale battles with one-shot kills the order of the day. If you want to test all elements of your first person shooter skills in one go, this is the game to do it.

We test using the 64-bit version of the game with a custom timedemo taken during a game against bots on the cs_militia map. This has a large amount of foliage and is generally one of the most graphically intensive maps available. We find a frame rate of at least 60fps is required for serious gaming, as this game relies massively on quick, accurate reactions that simply can’t be compromised by dropped frames. All in-game settings are set to their maximum and we test with 0xAA 0xAF, 2xAA 4xAF, and 4xAA 8xAF.

There’s not much to say here. All the cards are limited by the CPU (or other factors) throughout all our tests in this game.

Call of Duty 4 has to be one of our favourite games of 2007. It brought the Call of Duty brand bang up to date and proved that first person shooters didn’t need to have the best graphics, or the longest game time. It was just eight hours of pure adrenaline rush that constantly kept you on edge.

We test using the 64-bit version of the game patched to version 1.4. FRAPS is used to record frame rates while we manually walk through a short section of the second level of the game. We find a frame rate of 30fps is quite sufficient because, although the atmosphere is intense, the gameplay is less so – it doesn’t hang on quick reactions and high-speed movement. All in-game settings are set to their maximum and we test with 0xAA and 4xAA.

The advantage waxes and wanes throughout these tests but there’s no doubt the HD 4890 still holds a distinct lead over the GTX 275. Quite simply, this is another round to ATI.
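Each game above comes with its own playability threshold, and it can help to think of these as a time budget per frame. The sketch below collects the thresholds quoted in this review and converts them to milliseconds per frame; treating each threshold as an inclusive minimum on the average frame rate is our assumption (for Crysis the text says “above 30fps”), and the helper names are hypothetical.

```python
# Sketch: the review's per-game playability thresholds as frame-time budgets.
# Assumption: each threshold is treated as an inclusive minimum average fps.
# THRESHOLDS_FPS, frame_time_ms and is_playable are illustrative names.

THRESHOLDS_FPS = {
    "Crysis": 30,
    "Race Driver: GRID": 40,
    "Counter-Strike: Source": 60,
    "Call of Duty 4": 30,
}

def frame_time_ms(fps: float) -> float:
    """Time available to render each frame, in milliseconds."""
    return 1000.0 / fps

def is_playable(game: str, measured_fps: float) -> bool:
    """True if a measured average frame rate meets the threshold for that game."""
    return measured_fps >= THRESHOLDS_FPS[game]

if __name__ == "__main__":
    for game, fps in THRESHOLDS_FPS.items():
        print(f"{game}: {fps}fps -> {frame_time_ms(fps):.1f}ms per frame")
```

Seen this way, Counter-Strike: Source’s 60fps target leaves roughly half the per-frame time of Crysis or Call of Duty 4, which is why it is the most demanding threshold even though the game itself is the least demanding to render.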

There was little difference in power consumption between running this Zotac card at its default clock speeds and underclocked to reference speeds, so we’ve recorded a single figure for both. We take the reading from a wall-socket power meter that measures the power draw of the complete system.

Clearly, nVidia’s hard work to massively reduce idle power consumption for the original 200 series chips hasn’t been undone with this latest incarnation. Bearing in mind our earlier observation that the GTX 275 also runs quieter than the HD 4890 when idling, it’s a clear choice which way to go if you tend to leave your PC on for long periods of time. While this trend is reversed when the cards are under load, the difference is much smaller, so it wouldn’t sway our decision either way.

As well as underclocking this Zotac card, we also had a go at overclocking it further and had a little success. In fact, we pushed the core clock to 721MHz without too much trouble, a total overclock of 88MHz over nVidia’s reference speed of 633MHz. This works out as a 14 per cent increase, which is near enough the 15 per cent (850MHz to 975MHz) we achieved with the HD 4890.
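The percentages above are simple relative increases over the baseline clock. This short sketch checks them, assuming the GTX 275 baseline is the 633MHz reference clock implied by the figures (721MHz minus the 88MHz overclock); the function name is our own.

```python
# Sketch: percentage overclock relative to a baseline clock.
# Assumption: GTX 275 baseline = 633MHz (721MHz achieved minus the 88MHz overclock).
# overclock_percent is an illustrative helper name.

def overclock_percent(base_mhz: float, achieved_mhz: float) -> float:
    """Percentage increase of an achieved clock over a baseline clock."""
    return (achieved_mhz - base_mhz) / base_mhz * 100.0

if __name__ == "__main__":
    print(f"GTX 275 core: {overclock_percent(633, 721):.1f}%")  # GTX 275 core: 13.9%
    print(f"HD 4890 core: {overclock_percent(850, 975):.1f}%")  # HD 4890 core: 14.7%
```

Rounded to whole numbers these give the 14 and 15 per cent quoted above.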

Verdict

On the evidence we’ve gleaned, both the nVidia GeForce GTX 275 and the AMD ATI Radeon HD 4890 hold a clear advantage over what came before and are great value products, whichever you choose. As for *which* of the two to go for, that’s less clear cut.

If you tend to leave your computer on for long periods of time when not gaming, you may appreciate the lower idle power consumption of the GTX 275. Likewise, CUDA’s wider software support compared with ATI Stream may attract you to side with nVidia, especially if you work with video a lot, be it editing, transcoding or encoding. However, based on the games we’ve tested, ATI has the faster gaming card overall, and for us that’s still the most important consideration.

Trusted Score

Score in detail

- Value 8
- Features 7
- Performance 7