Opinion: Why the iPhone 11 Pro’s Deep Fusion tech could make it a Pixel 4 rival

The cameras on Apple’s iPhones have consistently lagged a few steps behind their rivals for years now – and the ‘crank it up to 11’ hype around the iPhone 11 Pro’s new photographic powers acknowledged that.

So now that yesterday’s reality distortion field has worn off, has Apple really managed to bridge the photographic gap between its iPhones and the likes of Google’s Pixels?

I think there’s a good chance it has, and the main reason is that ‘Deep Fusion’ (and to a lesser extent, ‘Night Mode’) means it’s finally taking Google on at its own computational photography game – with the added help of a couple of Apple’s traditional advantages.

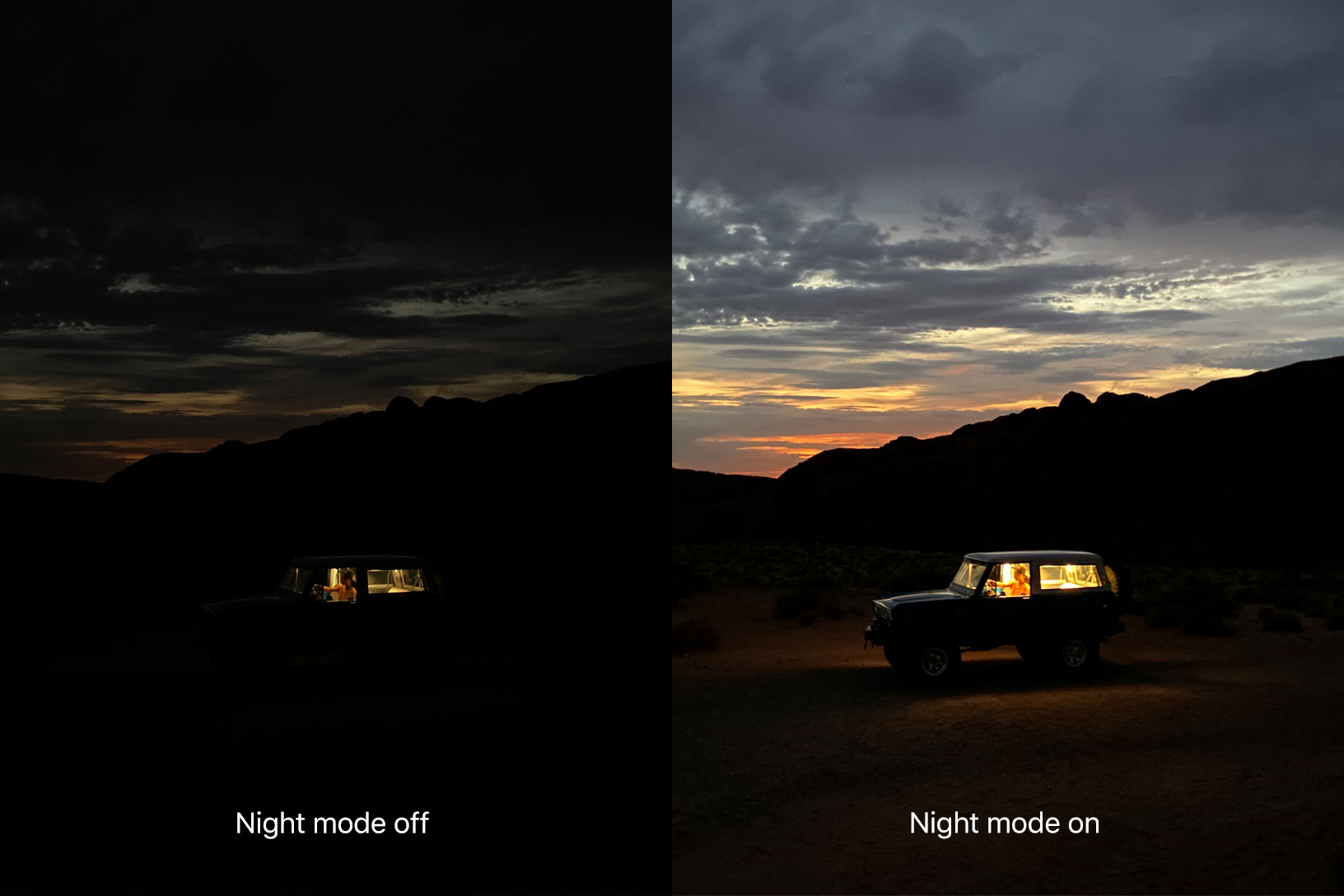

Of course, that long overdue ‘Night Mode’ is simply a case of Apple belatedly trading chess pieces with Google’s ‘Night Sight’. Available on all its new iPhones, including the cheaper iPhone 11, this mode appears to work in an identical way to the Pixel 3’s, fusing multiple images together to brighten night scenes and reduce noise.

Apple’s Night Mode also uses adaptive bracketing, which means it’ll reduce each frame’s exposure time if it senses motion in the shot. This means that, like on the Pixel 4, it could well be a useful mode away from night scenes – the recognisable, super-clean look of Google’s ‘Night Sight’ has become increasingly common as people have learned to love its versatility outside of pitch black scenes.

Related: iPhone 11 Pro and iPhone 11 Pro Max: Release date, price and specs

Deep Fusion: How it works

But Night Modes are nothing new. The more exciting feature is undoubtedly ‘Deep Fusion’. Granted, it’s another ludicrous Apple marketing term to file alongside Retina displays, and will only be available with a later firmware update. Apple’s (relatively sparse) description of how it works, though, does suggest it could yet help iPhones at least close the gap on their Google and Huawei rivals.

‘Deep Fusion’ isn’t another ‘Night Mode’ (as we’ve seen, Apple already has that). Instead, it sounds much more like the super-resolution technique Google has used before in its Super Res Zoom. That doesn’t mean Apple will be using it for zoom – it already has a 52mm telephoto camera for that – but instead for creating high-quality, high-resolution 24MP images that its sensor simply can’t produce on its own.

The iPhone 11 and iPhone 11 Pro’s Night Mode works in a similar way to Google’s Night Sight, and isn’t as exciting as Deep Fusion

So how does Deep Fusion work? Much like Google’s super-resolution technique, it uses a powerful image processing pipeline to combine the benefits of HDR with the Pixel Shift trickery seen on larger cameras like the Sony A7R IV. The result, in certain situations, should theoretically produce the best iPhone photos yet, and some of the best we’ve seen from a smartphone.

Like on the Pixel, ‘Deep Fusion’ will see the iPhone 11 Pro constantly buffering several images in anticipation of you pressing the shutter, tossing aside unused ones to make room for new frames. The Pixel’s HDR+ mode currently buffers up to 15 images, while the iPhone 11 Pro will instead work with nine – according to Apple, these will comprise four short images, four ‘secondary’ images, plus your final shutter press.
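To picture that buffering, here is a minimal Python sketch of a rolling frame buffer. It is not Apple’s implementation, which isn’t public: the `FrameBuffer` class, its method names and the capacity of eight (four short plus four ‘secondary’ frames) are assumptions drawn from the description above.

```python
from collections import deque


class FrameBuffer:
    """Hypothetical rolling buffer: keeps only the newest `capacity` frames."""

    def __init__(self, capacity=8):
        # Assumption: 8 = four short + four 'secondary' frames, per Apple's description.
        self._frames = deque(maxlen=capacity)

    def push(self, frame):
        # A deque with maxlen drops its oldest item automatically when full.
        self._frames.append(frame)

    def capture(self, long_exposure):
        # On shutter press: the buffered frames plus the one longer exposure.
        return list(self._frames) + [long_exposure]


buffer = FrameBuffer(capacity=8)
for i in range(20):  # simulate 20 preview frames arriving before the shutter press
    buffer.push(f"frame-{i}")

burst = buffer.capture("long-exposure")
print(len(burst))            # 9
print(burst[0], burst[-1])   # frame-12 long-exposure
```

The point is simply that older frames fall off the back as new ones arrive, so the camera always has the most recent moments to hand when you press the shutter.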

So far, so Smart HDR, albeit with twice the number of buffered images. But where Deep Fusion takes things up a notch is with the final shutter press, which is one longer exposure, and the way the photo is then assembled.

It isn’t yet clear whether Deep Fusion will be available on all three of the iPhone 11 Pro’s cameras, or just the main 26mm one.

Firstly, unlike Night Modes, Deep Fusion will apparently be a real-time process. Thanks to the relatively short shutter speeds of the buffered frames, you shouldn’t need to hold still and wait while the camera bursts. There’ll just be the longer exposure time when you press the shutter, plus the one second its neural engine will take to process it.

The latter is also another key difference from HDR – the iPhone 11 Pro won’t just be averaging out the collected frames, but rather assembling a larger 24-megapixel image pixel-by-pixel, presumably with the help of OIS, “to optimise for detail and low noise”.

Exactly how it’ll do this isn’t clear, but it could well use the Google technique of breaking each frame up into thousands of tiles, aligning them, then doing some extra processing on top. This would mean slight movements in your scene shouldn’t appear as a blur, making it a more versatile mode rather than just a trick shot.
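For the curious, the tile idea can be sketched in a few lines of Python. This is a toy illustration of generic tile-based align-and-merge, not Apple’s or Google’s actual pipeline: the function names, the tile size, the search radius and the use of a simple sum of absolute differences are all assumptions made for the example.

```python
def tile(img, y, x, size):
    """Return the size-by-size block of img whose top-left corner is (y, x)."""
    return [row[x:x + size] for row in img[y:y + size]]


def sad(a, b):
    """Sum of absolute differences between two equally sized blocks."""
    return sum(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))


def best_match(ref_tile, frame, y, x, size, radius):
    """Search a small window of `frame` for the block most similar to ref_tile."""
    h, w = len(frame), len(frame[0])
    best, best_cost = None, float("inf")
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            yy, xx = y + dy, x + dx
            if 0 <= yy <= h - size and 0 <= xx <= w - size:
                cand = tile(frame, yy, xx, size)
                cost = sad(ref_tile, cand)
                if cost < best_cost:
                    best, best_cost = cand, cost
    return best


def align_and_merge(reference, others, size=4, radius=2):
    """Align each tile of the other frames to the reference, then average them."""
    h, w = len(reference), len(reference[0])
    out = [row[:] for row in reference]
    for y in range(0, h - size + 1, size):
        for x in range(0, w - size + 1, size):
            ref_tile = tile(reference, y, x, size)
            stack = [ref_tile] + [
                best_match(ref_tile, f, y, x, size, radius) for f in others
            ]
            for j in range(size):
                for i in range(size):
                    out[y + j][x + i] = sum(t[j][i] for t in stack) / len(stack)
    return out


# Toy scene: an 8x9 pattern, plus a second frame shifted one pixel to the right.
reference = [[(x * 7 + y * 13) % 31 for x in range(9)] for y in range(8)]
shifted = [[0] + row[:-1] for row in reference]

merged = align_and_merge(reference, [shifted])
naive = [[(a + b) / 2 for a, b in zip(r, s)] for r, s in zip(reference, shifted)]

print(merged == reference)  # True: aligned tiles average back to the sharp scene
print(naive == reference)   # False: averaging without alignment smears the shift
```

In this toy case, the one-pixel shift between frames is found tile by tile and cancelled out before averaging, which is why movement needn’t turn into blur.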

Either way, Deep Fusion is a big deal because it’s not just a niche sub-feature like Apple’s Portrait lighting – it’s an entire image processing system that will likely combine the powers of OIS, the A13 Bionic chip, exposure stacking and Google-style machine learning (plus perhaps even all three lenses, as has been rumoured) to help bridge the gap to the incoming Pixel 4.

Related: The Pixel 4 needs this camera feature – and it has nothing to do with photography

Fusion reaction

So what situations will Deep Fusion be useful for? The sample portrait shot Apple used (below) contained “low to medium light”, and this seems the best scenario for it – not so dark that you’ll need Night Mode, but challenging enough that you’ll see the real benefit of all that processing and get a larger 24-megapixel shot in the process. Think people portraits, architecture and morning or dusk landscapes.

This means Apple might put Deep Fusion as an ‘on/off’ option in the camera menus (with a warning about the extra storage its 24-megapixel shots will need) or, like Google, make it one of the main camera modes. We’ll find out “this Fall”, which is when Apple says Deep Fusion will arrive on the iPhone 11 Pro and Pro Max as a software update.

This photo in “low to medium light” was shot using Deep Fusion, and apparently shows the level of detail we can expect from its 24-megapixel images

The delay and Apple’s vagueness around Deep Fusion are undoubtedly related to the imminent announcement of the Pixel 4, which will arrive in mid-October 2019. Why show all your cards only to get trumped by Google’s own new “computational mad science”, to borrow the phrase Apple smugly used for its new camera feature?

But there’s enough promise in the details of Deep Fusion to suggest it’s going to, if not exactly leapfrog its rivals, at least allow the iPhone 11 Pro to draw level with them in lower light stills, while retaining its lead in video.

Apple has rarely been first with any new tech. What it usually does is refine existing things – as it did with Portrait mode on the iPhone 7 Plus – then release them when they’re truly ready for prime-time, while crowing about how it was first all along.

Google is still in the box-seat when it comes to computational photography, but with Deep Fusion it looks like Apple is finally preparing to fully commit to the party. With a strong platform in the form of the A13 Bionic chip, and the promise of apps like Filmic Pro and Halide tapping into its photographic powers, the iPhone 11 Pro might finally see Apple catch up with rivals like the incoming Pixel 4.