Instagram plotting to ban more people, but offenders will be warned

Instagram has announced plans to warn users that their account may be disabled before dropping the mighty ban hammer.

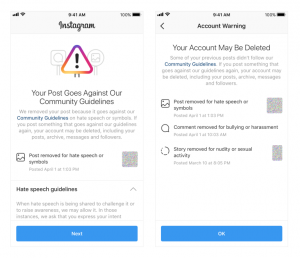

The changes to the moderation policy will see users informed when a post breaches the community guidelines, and told clearly that their account could be deleted if they reoffend.

The Account Warning system keeps track of posts and comments that have been removed for reasons such as hate speech or symbols, bullying or harassment, or nudity or sexual activity. The company may also explain its guidelines on hate speech and other violations in more detail, in an attempt to educate users.

This should address the problem of offenders opening the app only to find their account has been deleted, along with their posts, archives, messages and followers. It appears that Instagram will be deleting more accounts, but will be more upfront about the reasons and will give users ample opportunity to change their behaviour. That is reflected in the introduction of an appeals process for deleted content.


Instagram says accounts with a certain percentage of posts containing violating content, as well as those that rack up a certain number of violations within a set period of time, will be subject to bans.

Image credit: Instagram

In a blog post, the Facebook-owned company wrote: “To start, appeals will be available for content deleted for violations of our nudity and pornography, bullying and harassment, hate speech, drug sales, and counter-terrorism policies, but we’ll be expanding appeals in the coming months. If content is found to be removed in error, we will restore the post and remove the violation from the account’s record.”

The social network says the change will help it enforce policies more consistently, and hold users accountable for what they post on Instagram. Will it work? Let us know @TrustedReviews on Twitter.