Google’s fix for its ‘racist’ Photos app couldn’t be clunkier if it tried

Google is having trouble elegantly removing the seemingly accidental ‘racism’ its Photos service has stumbled into.

Back in 2015 Jacky Alciné, a black software developer, tweeted at Google to point out that its machine learning-powered photo labelling tech had automatically categorised a photo of two black people as ‘gorillas’.

Google apologised for the racist gaffe its service had committed, saying it was appalled and would take action to fix the problem.

Google Photos, y’all fucked up. My friend’s not a gorilla. pic.twitter.com/SMkMCsNVX4

— Jacky Alciné (@jackyalcine) June 29, 2015

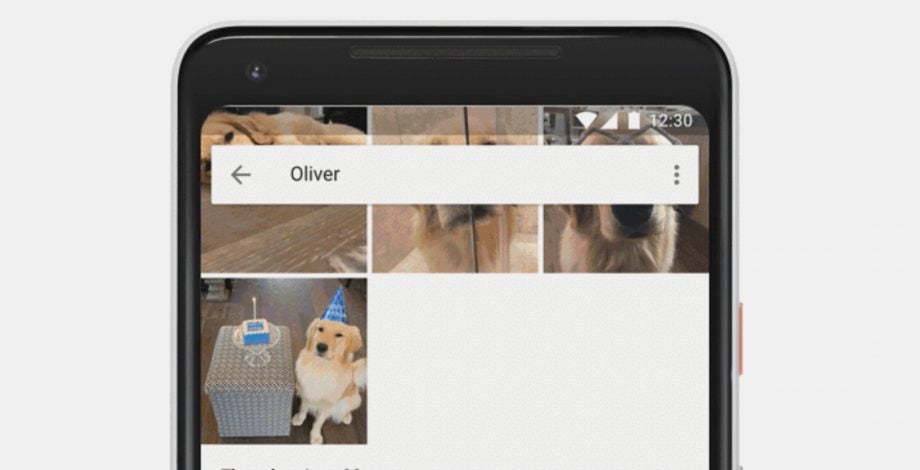

Roll on two years and it appears Google’s solution to the problem is remarkably clunky for a tech giant that produces fairly slick software these days.

To get around any potential racist categorisation, it appears that Google stripped the term ‘gorilla’ from the vocabulary the Photos service uses to categorise photographs.

Wired conducted a series of tests and found that, despite searching for gorillas and other primates in a photo library well stocked with animal images, the service returned no results for terms like ‘gorilla’ and ‘monkey’.

The testing also found that some types of monkey could be surfaced in Google Photos, provided the search term did not include the word ‘monkey’.
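For the curious, the behaviour Wired observed is consistent with a simple word blocklist applied to labels and search queries. The snippet below is only a minimal sketch of that idea, not Google’s actual implementation, which has not been published. The names `BLOCKED_WORDS`, `is_blocked` and `search`, the contents of the blocklist and the sample library are all hypothetical and exist purely for illustration.

```python
from __future__ import annotations

# Hypothetical blocklist; the real list of purged terms is not public.
BLOCKED_WORDS = {"gorilla", "gorillas", "monkey", "monkeys"}


def is_blocked(term: str) -> bool:
    """Return True if any word in the term is on the blocklist."""
    return any(word in BLOCKED_WORDS for word in term.lower().split())


def search(library: dict[str, list[str]], query: str) -> list[str]:
    """Return names of photos with an unblocked label equal to the query."""
    if is_blocked(query):
        return []  # a blocked query never returns results
    query = query.lower()
    return sorted(
        name
        for name, labels in library.items()
        if any(label.lower() == query and not is_blocked(label) for label in labels)
    )


# Illustrative photo library: file name -> labels a classifier might assign.
library = {
    "zoo1.jpg": ["gorilla"],
    "zoo2.jpg": ["baboon"],
    "zoo3.jpg": ["spider monkey"],
}
```

Under these assumptions, a search for ‘baboon’ finds `zoo2.jpg`, while ‘gorilla’ and ‘spider monkey’ come back empty even though matching photos are in the library. The catch with this kind of fix is plain to see: it hides the offending labels without making the underlying classifier any more accurate.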

Equally problematic was a third wave of testing, which suggested Google’s image recognition tech has trouble finding accurate pictures to fit the search terms ‘black woman’, ‘black man’ and ‘black person’. Wired noted that the images served up were black-and-white photos, with only the terms ‘afro’ and ‘African’ returning pictures of black people.

A spokesperson from Google then confirmed to Wired that some terms relating to monkeys and apes had been purged from Photos, noting: “Image labelling technology is still early and unfortunately it’s nowhere near perfect.”

Google’s fix may have been fairly inelegant, but the whole situation highlights how even some of the smartest technology is still far from infallible and there’s a long way for machine learning to go before it can apply common sense.

And given the smart features in Photos are trained on masses of images, many of which are taken in less than optimal conditions, it’s arguably not surprising that the service can trip over itself.

Given the limitations the technology currently faces, it’s no surprise Google has taken a heavy-handed approach to stopping Photos from offending anyone else, or to stopping its algorithms from developing an unintentionally racist bent.

How should Google prevent its machine learning tech from being accidentally racist? Have your say by tweeting @TrustedReviews or get in touch on Facebook.