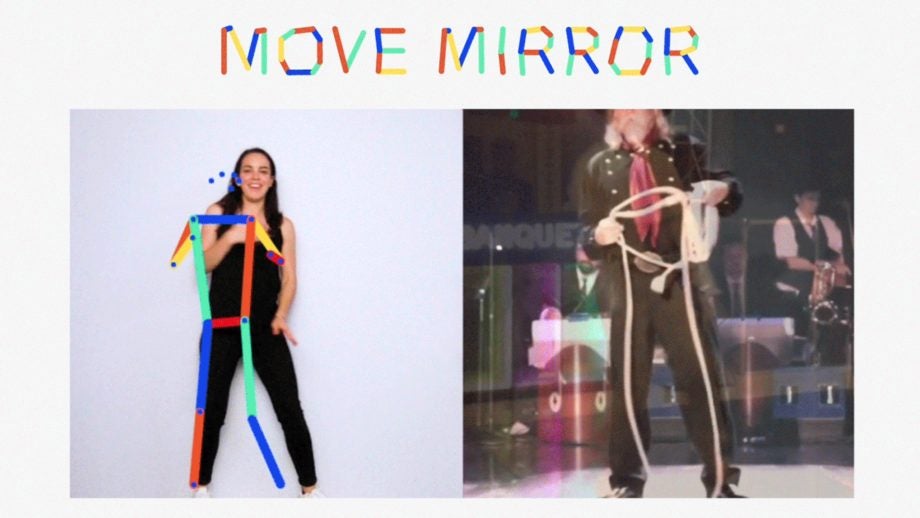

Google’s Move Mirror is probably the coolest thing you’ll see today

Google has launched an experimental ‘Move Mirror’ project which aims to instantly match your poses with an image from its vast library.

The Chrome browser-based tool captures your movements in real-time and uses machine learning to locate the closest approximation from over 80,000 Google Images entries.

Those taking Move Mirror for a spin will even be able to create a shareable GIF of the footage. In our experience it isn’t quite as impressive as Google’s demo, though that may depend on the lighting, the background, the quality of your webcam and the speed of your internet connection.

The fun experiment uses a Google-made, open-source ‘pose estimation model’ called PoseNet. This identifies where your key body joints are, effectively creating a basic motion-capture tool. The whole thing is powered by TensorFlow.js, which runs the machine learning directly in your browser rather than sending your images to, or storing them on, a server.
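For the curious, here is a rough idea of how a pose-matching step like this could work. It is a minimal TypeScript sketch, not Google’s code: it assumes each pose arrives as a list of (x, y) joint positions of the kind PoseNet estimates, that every pose has the same joints in the same order, and the names `Keypoint`, `PoseEntry` and `closestMatch` are hypothetical. Each pose is normalised for position and size, then cosine similarity picks the library image whose pose is most alike.

```typescript
// A 2D body-joint position (assumed input format, e.g. from a pose model).
interface Keypoint {
  x: number;
  y: number;
}

// Hypothetical library record: an image ID plus its pre-computed pose.
interface PoseEntry {
  imageId: string;
  keypoints: Keypoint[];
}

// Flatten to [x0, y0, x1, y1, ...], shift so the pose's bounding box starts
// at the origin, then scale to unit length so position and size don't matter.
function normalize(keypoints: Keypoint[]): number[] {
  const minX = Math.min(...keypoints.map((k) => k.x));
  const minY = Math.min(...keypoints.map((k) => k.y));
  const flat = keypoints.flatMap((k) => [k.x - minX, k.y - minY]);
  const norm = Math.hypot(...flat);
  return norm === 0 ? flat : flat.map((v) => v / norm);
}

// For unit-length vectors, cosine similarity is simply the dot product.
// Assumes both vectors have the same length (same joints, same order).
function cosineSimilarity(a: number[], b: number[]): number {
  return a.reduce((sum, v, i) => sum + v * b[i], 0);
}

// Return the library entry whose pose is most similar to the query pose.
function closestMatch(query: Keypoint[], library: PoseEntry[]): PoseEntry | undefined {
  const q = normalize(query);
  let best: PoseEntry | undefined;
  let bestScore = -Infinity;
  for (const entry of library) {
    const score = cosineSimilarity(q, normalize(entry.keypoints));
    if (score > bestScore) {
      bestScore = score;
      best = entry;
    }
  }
  return best;
}
```

Because both poses are scaled to unit length, someone standing closer to or further from the webcam can still match the same image; a real system would also have to cope with joints the model is unsure about or cannot see.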

Google says it hopes the experiment will inspire coders to play around with the tech and make machine learning more accessible.

In a blog post on Friday, Google wrote: “With Move Mirror, we’re showing how computer vision techniques like pose estimation can be available to anyone with a computer and a webcam. We also wanted to make machine learning more accessible to coders and makers by bringing pose estimation into the browser—hopefully inspiring them to experiment with this technology.”

Have you tried out the Move Mirror experiment? Share your thoughts with us @TrustedReviews on Twitter.