nVidia GeForce 7950 GX2 Review

nVidia GeForce 7950 GX2

If you haven't got an SLI-capable motherboard, this dual-GPU card is worth considering.


Not too long ago, manufacturers such as Gigabyte and Asus made graphics cards that put two nVidia chips onto one card. My personal feeling was that they were pretty pointless: you still needed an SLI motherboard, you could only use one at a time, and yet they cost the same as buying two cards. In the Gigabyte's case, you even needed a specific motherboard.

So when nVidia launched the GeForce 7950 GX2, I was immediately sceptical about what it was for.

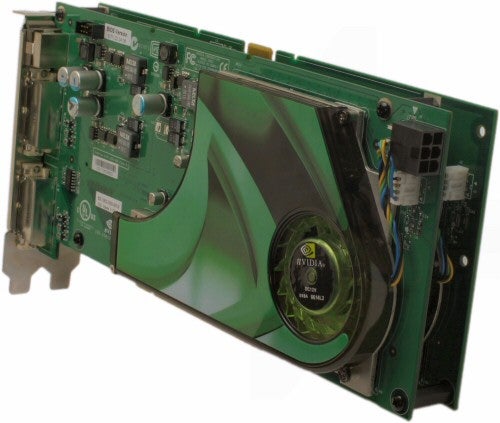

As you can see from the image above, this card is essentially two PCBs bolted together to make a single card. It uses one PCI-E slot and needs only a single power connector.

Above, you can see what the card looks like when pulled apart. A small connecting PCB bridges the two ‘cards’ together. Each PCB carries a GeForce 7900 GT core running at 500MHz instead of 450MHz, but with slightly slower memory, clocked at 600MHz instead of 660MHz. The memory modules are the same 1.4ns Samsung parts, which are in theory rated to 715MHz. So one has to question the motive for underclocking them by 115MHz (or 230MHz when taking DDR into account).
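The clock arithmetic above can be checked in a few lines of Python. This is a minimal sketch that assumes the common rule of thumb that a memory chip's rated clock in MHz is roughly 1,000 divided by its access time in nanoseconds, and that DDR memory transfers data twice per clock; the constant and function names are illustrative only.

```python
# Memory clocks quoted in the review, in MHz.
RATED_MHZ = 715     # quoted rating for the 1.4ns Samsung modules
GT_MEM_MHZ = 660    # stock GeForce 7900 GT memory clock
GX2_MEM_MHZ = 600   # GeForce 7950 GX2 memory clock


def rated_clock_from_access_time(access_time_ns: float) -> float:
    """Rule-of-thumb rated clock (MHz) for a given access time (ns)."""
    return 1000.0 / access_time_ns


def ddr_effective_mhz(clock_mhz: int) -> int:
    """DDR memory performs two transfers per clock cycle."""
    return 2 * clock_mhz


# 1000 / 1.4 is about 714.3MHz, which the review rounds to 715MHz.
approx_rating = rated_clock_from_access_time(1.4)

# Headroom given away relative to the modules' quoted rating.
headroom_mhz = RATED_MHZ - GX2_MEM_MHZ
headroom_effective_mhz = ddr_effective_mhz(RATED_MHZ) - ddr_effective_mhz(GX2_MEM_MHZ)

print(f"Approximate rating: {approx_rating:.1f}MHz")
print(f"Underclock: {headroom_mhz}MHz ({headroom_effective_mhz}MHz effective)")
```

Running it prints an approximate rating of 714.3MHz and an underclock of 115MHz (230MHz effective).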

What first struck me about this card is how amazingly quiet it is. Compared with two 7900 GTs, the difference was astonishing. Despite the reduction in both noise and airflow, the card’s temperature seemed fine. When overclocking, it did get a little on the hot side, but I’d sooner hold back the clock speeds a little and save myself a noise-related headache.

The chip in the above picture is what makes this card work. It is a PCI Express to PCI Express bridge and is fully compliant with the PCI Express standards, which means the card should work in any motherboard, SLI-ready or not. Some boards may need a BIOS update because they aren’t used to seeing such a device, but we didn’t have an issue with our Asus A8N32-SLI. The chip has 48 lanes: 16 connecting to the motherboard slot and a further 16 running to each of the two GPU boards.

Inevitably, this means the card is bottlenecked by the 16 lanes of the PCI-E slot. However, because each PCB has its own 16 lanes, in a game that doesn’t support SLI the first PCB gets full use of the slot’s bandwidth. It also means communication between the two cores should incur little or no extra latency.
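To make the bandwidth argument concrete, here is a simplified model of the bridge in Python. It assumes first-generation PCI Express signalling at roughly 250MB/s per lane in each direction, a figure the review does not state, and it only considers traffic between the host and the GPU boards, ignoring protocol overhead and any traffic passing directly between the two boards through the bridge. All names are hypothetical.

```python
# Simplified bandwidth model of the 7950 GX2's PCI Express bridge.
# Assumption: PCI Express 1.x, about 250MB/s per lane per direction.
MB_S_PER_LANE = 250

UPSTREAM_LANES = 16    # bridge <-> motherboard slot
DOWNSTREAM_LANES = 16  # bridge <-> each GPU board
GPU_BOARDS = 2

TOTAL_BRIDGE_LANES = UPSTREAM_LANES + GPU_BOARDS * DOWNSTREAM_LANES


def link_bandwidth_mb_s(lanes: int) -> int:
    """Peak one-direction bandwidth of a link with the given lane count."""
    return lanes * MB_S_PER_LANE


def host_bandwidth_per_gpu(active_gpus: int) -> float:
    """Best-case host-to-GPU bandwidth per board when `active_gpus`
    boards are transferring at the same time.

    Each board has its own x16 link, but all host traffic has to share
    the single x16 link to the motherboard slot.
    """
    if not 1 <= active_gpus <= GPU_BOARDS:
        raise ValueError("the card carries one or two GPU boards")
    upstream_share = link_bandwidth_mb_s(UPSTREAM_LANES) / active_gpus
    return min(link_bandwidth_mb_s(DOWNSTREAM_LANES), upstream_share)


print(TOTAL_BRIDGE_LANES)         # 48
print(host_bandwidth_per_gpu(1))  # non-SLI game: first board gets the whole slot
print(host_bandwidth_per_gpu(2))  # both boards busy: the slot link is split
```

In this model a game that doesn't use SLI gives the first board the whole x16 slot (about 4GB/s under the stated assumption), while two boards pulling data from the host at once see at most half each.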

However, the fact that this card uses only one slot, doesn’t require an SLI motherboard and is detected by the operating system as one GPU doesn’t make it a single GPU. SLI technology is still at work, and a game needs an SLI profile in place to get full performance. nVidia has made quite a point of advertising this as a single GPU, replacing the SLI on/off setting with “Dual Display” and “Dual GPU” modes. We couldn’t find any SLI profile settings in the drivers we used (91.29), which meant we couldn’t specify any profiles manually.

For this reason, I find it a little misleading to advertise this as a 48-pipeline card with a 1GB frame buffer. I must emphasise that this is two 24-pipeline parts, each with its own 512MB frame buffer. As with the 7900 GT, each core has 16 pixel output engines and eight vertex shaders.
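The gap between the advertised and usable figures can be sketched as follows. The sketch assumes, as is generally the case with SLI rendering, that each GPU keeps its own copy of the scene data in its own memory, so the frame buffer does not pool across the two boards; the class and variable names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuBoard:
    """One half of the 7950 GX2, using the figures quoted in the review."""
    pixel_pipelines: int
    frame_buffer_mb: int


boards = [GpuBoard(pixel_pipelines=24, frame_buffer_mb=512),
          GpuBoard(pixel_pipelines=24, frame_buffer_mb=512)]

# Marketing totals simply add the two boards together.
advertised_pipelines = sum(b.pixel_pipelines for b in boards)
advertised_memory_mb = sum(b.frame_buffer_mb for b in boards)

# Assumption: under SLI each GPU mirrors the same textures and geometry,
# so a game can only use as much memory as a single board has.
usable_memory_mb = min(b.frame_buffer_mb for b in boards)

# The second board's pipelines only help if an SLI profile lets the
# game use both GPUs; otherwise only the first board renders.
pipelines_without_profile = boards[0].pixel_pipelines

print(advertised_pipelines, advertised_memory_mb)   # 48 1024
print(usable_memory_mb, pipelines_without_profile)  # 512 24
```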

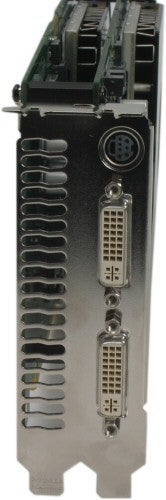

Unlike any other reference card to date, this card is fully HDCP compliant, which is great news. There are two dual-link DVI connectors. However, just as with SLI, you can’t run dual displays while SLI or “Dual GPU” mode is enabled. To use two displays, you must switch to “Dual Display” mode, or in the case of SLI, turn SLI off. This is a real annoyance. I don’t understand how nVidia can create something like TurboCache, which only uses system memory when a 3D application is loaded, yet can’t apply a similar principle to SLI.

As you can see above, it’s a dual-slot card, which is not really surprising. But unlike SLI, you get only two DVI connections. This means you can only use two displays, rather than the four available when running two cards in a non-SLI display mode. This isn’t a huge drawback really, and there are many ways around it should you need more displays.

Anyone with a keen eye will have noticed the SLI connector on top of the card. This card will be used in OEM-supported Quad SLI machines. However, Quad SLI is not currently supported for anyone who wants to build their own system, and drivers for it won’t be available for some time. It is a clear upgrade path, though, for anyone who knows what they are doing. Previous Quad SLI implementations have been a little on the poor side, so I would like to see a decent setup before suggesting this as an upgrade path, but the option is still there.

The nVidia cards were tested on an Asus A8N32-SLI using an Athlon 64 FX-60, 2GB of CMX1024-3500LLPRO RAM and a Seagate Barracuda ST340083A8 hard disk. Power was supplied by a Tagan 900W TG900-U95. For ATI testing, everything was kept the same except for the use of an Asus A8R32-MVP Deluxe and an Etasis 850W ET850.

All of the nVidia cards were tested using the beta 91.29 ForceWare drivers, except the 7900 GTs running in SLI at standard clock speeds, which were tested using the slightly older 84.17. The X1900 XTX was tested using the official Catalyst 6.5 drivers.

Using our proprietary automated benchmarking suite, aptly dubbed ‘SpodeMark 3D’, I ran Call of Duty 2, Counter-Strike: Source, Quake 4, Battlefield 2 and 3DMark06. Apart from 3DMark06, these all use our in-house pre-recorded timedemos of the most intense sections of each game I could find. Each setting is run three times and the average is taken, for reproducible and accurate results. I ran each game at 1,280 x 1,024, 1,600 x 1,200, 1,920 x 1,440 and 2,048 x 1,536, each with three quality settings: no FSAA with trilinear filtering, 2x FSAA with 4x AF, and 4x FSAA with 8x AF.
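For a sense of scale, the test matrix described above can be enumerated in Python. This is not SpodeMark 3D code, whose internals the review doesn't describe; it simply assumes every game is run at every resolution and quality setting, three times each, with the reported figure being the plain arithmetic mean of those runs.

```python
from itertools import product
from statistics import mean
from typing import Sequence

# Test matrix described in the review (3DMark06 is run separately).
GAMES = ["Call of Duty 2", "Counter-Strike: Source", "Quake 4", "Battlefield 2"]
RESOLUTIONS = [(1280, 1024), (1600, 1200), (1920, 1440), (2048, 1536)]
QUALITY_SETTINGS = [
    "0x FSAA, trilinear filtering",
    "2x FSAA, 4x AF",
    "4x FSAA, 8x AF",
]
RUNS_PER_SETTING = 3

# Every combination of game, resolution and quality setting.
test_matrix = list(product(GAMES, RESOLUTIONS, QUALITY_SETTINGS))
total_runs_per_card = len(test_matrix) * RUNS_PER_SETTING


def reported_fps(run_fps: Sequence[float]) -> float:
    """Average of the repeated timedemo runs for one setting."""
    if len(run_fps) != RUNS_PER_SETTING:
        raise ValueError(f"expected {RUNS_PER_SETTING} runs, got {len(run_fps)}")
    return mean(run_fps)


print(len(test_matrix), total_runs_per_card)  # 48 144
# Placeholder frame rates, not results from this review:
print(reported_fps([59.0, 60.0, 61.0]))       # 60.0
```

Even before counting 3DMark06 or the extra Call of Duty 2 runs, that is 144 timedemo runs for every card configuration, which is why automating the process matters.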

I was interested to see how the PCI Express bridge affected performance, so I set two 7900 GTs to the same clock speeds as the 7950 GX2 and used the same 91.29 drivers for comparison. I also tested Call of Duty 2 with its SLI optimisation turned both on and off, to see how much difference it made to the 7950 GX2 and to the two 7900 GTs, in an effort to show that this is not really a single card.

Obviously there were a lot of results to look at, but the questions in my mind were: how does it compare to the X1900 XTX (currently the fastest single card available), how does it compare to a pair of equally clocked 7900 GTs, and how does it compare to a pair of similarly priced full-speed 7900 GTs.

I also managed to overclock this card to 600MHz on the core and 800MHz (1,600MHz effective) on the memory and these performance results are included for comparison.
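Put in relative terms, that is a sizeable overclock. A short Python sketch of the arithmetic, using only the clock speeds quoted in this review (the function name is illustrative):

```python
def percent_increase(base_mhz: float, new_mhz: float) -> float:
    """Percentage increase from base_mhz to new_mhz."""
    return (new_mhz - base_mhz) * 100 / base_mhz


core_gain = percent_increase(500, 600)    # stock 500MHz core to 600MHz
memory_gain = percent_increase(600, 800)  # stock 600MHz memory to 800MHz
over_rating = percent_increase(715, 800)  # versus the modules' quoted 715MHz rating

print(f"Core: +{core_gain:.1f}%, memory: +{memory_gain:.1f}%")
print(f"Memory is {over_rating:.1f}% above the modules' quoted rating")
```

This prints a core gain of 20.0 per cent, a memory gain of 33.3 per cent, and a memory clock 11.9 per cent above the 715MHz rating quoted earlier.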

Having the two GPUs on one card should fractionally improve performance over a two-card SLI setup, as there is less latency. This was indeed the case, with gains of one or two frames per second, but certainly nothing major. At the higher resolutions, however, the differences became quite large: 38 fps compared with 30. That’s quite a performance difference considering how little has changed. In Counter-Strike: Source, the difference was even larger, 60 fps versus 24. This was enough to make me wonder whether something fishy was going on.
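Expressed as ratios, the two anomalies look like this. Since both setups use the same GPUs at the same clocks, lower bridge latency alone would be expected to give ratios close to 1, so a 2.5x result points to something else, such as a driver problem. The function name is illustrative.

```python
def speedup(card_fps: float, baseline_fps: float) -> float:
    """How many times faster card_fps is than baseline_fps."""
    if baseline_fps <= 0:
        raise ValueError("baseline frame rate must be positive")
    return card_fps / baseline_fps


# 7950 GX2 versus two 7900 GTs downclocked to the same speeds.
high_res = speedup(38, 30)  # high-resolution result quoted above
css = speedup(60, 24)       # Counter-Strike: Source result

print(f"High resolution: {high_res:.2f}x, Counter-Strike: Source: {css:.2f}x")
```

The high-resolution gap works out at about 1.27x, while Counter-Strike: Source comes out at 2.50x, more than the second GPU could explain even in the best case.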

I went back into the games to look for myself, as my concern was that AA was not being switched on. I was wrong. However, the level I use in Counter-Strike: Source contains HDR effects, and on the 7950 GX2 I noticed a few errors in the console when HDR textures were loading. I think a bug in the 91.29 drivers is causing these high results. For the best indication of 7950 GX2 performance, I would rather you paid more attention to the downclocked 7900 GTs in SLI than to the 7950 GX2 results.

In most cases, the 7950 GX2 (or simulated 7950 GX2) was faster than two full-speed 7900 GTs. I think that’s because in most cases the extra memory bandwidth isn’t needed, and the higher core speed helps a lot more. However, at the highest setting in Counter-Strike: Source, the memory bandwidth was obviously needed: the full-speed 7900 GTs attained 35 fps against 24, and that’s using older drivers too.

The final question is how this compares with the X1900 XTX. Feature-wise, the X1900 XTX obviously has FSAA+HDR on its side. On performance, however, the ball was clearly in nVidia’s court, with the 7950 GX2 being on average 30 per cent faster. That said, the fact that nVidia needs a second GPU to achieve this is a testament to the technology behind the X1900 XTX.
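The review doesn't say how the 30 per cent average was worked out. One common approach, assumed here rather than taken from the review, is the geometric mean of the per-test frame-rate ratios, which keeps any single extreme result (such as the suspicious Counter-Strike: Source figures) from dominating the average. The numbers in this sketch are placeholders, not results from this review.

```python
from statistics import geometric_mean


def average_speedup(results):
    """Geometric mean of per-test frame-rate ratios.

    `results` is a list of (card_a_fps, card_b_fps) pairs measured on
    the same test; a value of 1.3 means card A is 30 per cent faster.
    """
    ratios = [a / b for a, b in results]
    if not ratios:
        raise ValueError("need at least one result")
    return geometric_mean(ratios)


# Placeholder frame rates, NOT results from this review.
example = [(52.0, 40.0), (39.0, 30.0), (26.0, 20.0)]
print(f"{(average_speedup(example) - 1) * 100:.0f}% faster on average")
```

Unlike an arithmetic mean of ratios, the geometric mean treats "twice as fast" and "half as fast" symmetrically, so the two cancel out to 1.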

Verdict

The 7950 GX2 is the fastest card available for a motherboard with a single graphics slot, full stop. However, calling it a single GPU is misleading, and anyone considering buying one should be aware of its caveats: mainly that games require an SLI profile, and that it can’t run dual monitors without tediously disabling “Dual GPU” mode.

At around the £400 mark, this is a lot to pay for a graphics card, and unlike SLI, which can be bought in two manageable chunks, it must be paid for all at once. I would only consider buying one if I didn’t have an SLI motherboard, or fully intended to upgrade to Quad SLI in the future. For most people, a single 7900 GT, with a second added in six months’ time, would offer better value for money.

All in all though, this is not the disappointment I thought it would be. It’s fast, quiet and the price is competitive for the performance.

Though not particularly self-explanatory, these graphs show the difference that turning on “Optimise for SLI” in Call of Duty 2 makes, thus answering the question: is this a single GPU or not?

Trusted Score

Score in detail

- Value 8
- Features 7
- Performance 10